OpenStack is a cloud operating system that controls large pools of compute, storage and networking resources throughout a data center. OpenStack provides RESTful APIs to manage all of these resources, along with authentication, pricing and provisioning.

Administrators and end users get a web-based dashboard that gives them control over their resources and improves the user experience.

OpenStack components provide orchestration, fault tolerance and service management functionality that extends the capabilities of the cloud.

|

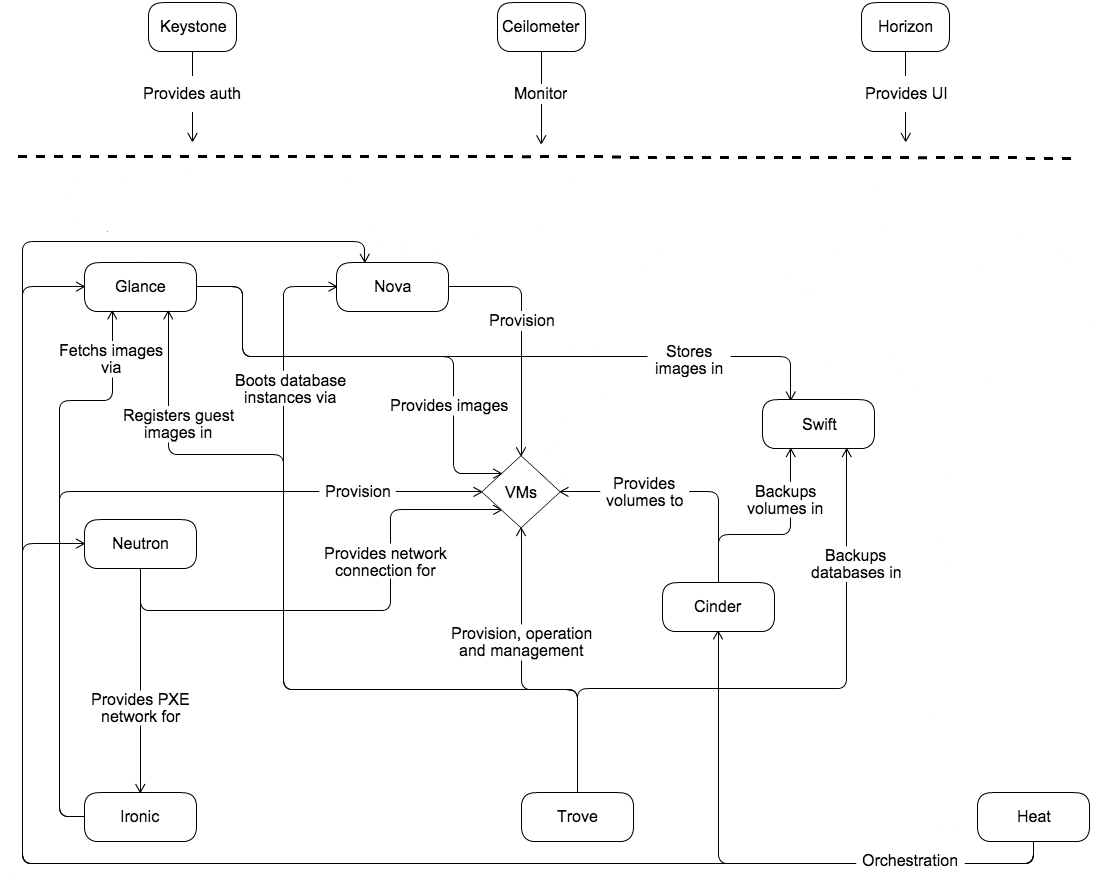

| 1-OpenStack Map |

Components of the OpenStack Cloud Operating System

OpenStack consists of the following components, which work together to keep the whole system running correctly. The components are further divided into categories according to their workflow.

i- Conceptual Architecture

|

| 2-Conceptual Architecture of OpenStack |

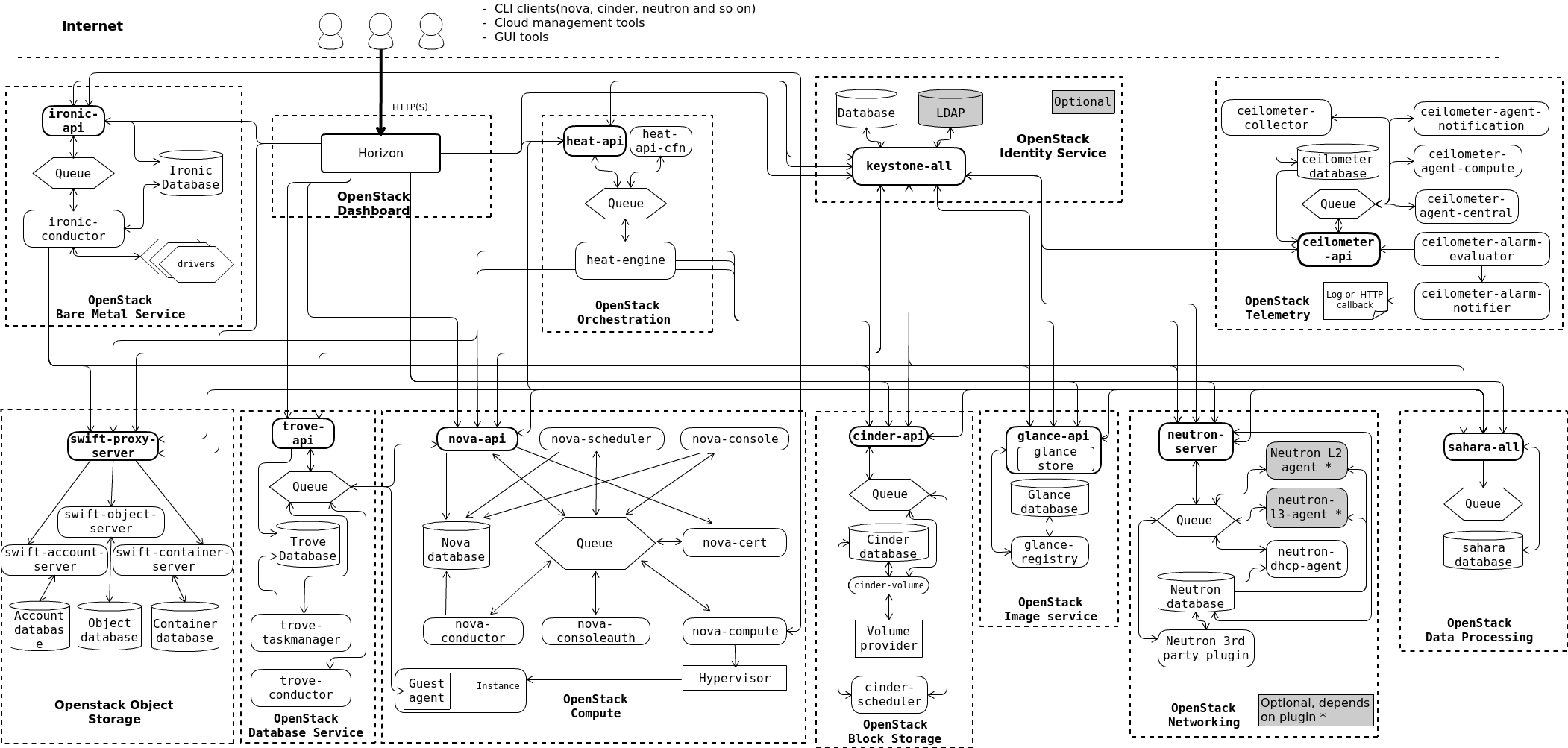

ii- Logical Architecture

Administrators must understand the logical architecture of OpenStack before installing, deploying and configuring it. The conceptual architecture shows the OpenStack services/components as independent parts. Users must be authenticated through a dedicated identity service before they can use these services, and the OpenStack APIs expose the services and grant special privileges to administrators.

All processes and operations are carried out through APIs, and every service has at least one API process. An AMQP message broker is used for communication between processes and services, and the state of every service is stored in a database.

End users can access OpenStack through the web-based interface implemented by the Horizon dashboard component, or use command-line tools to work with the OpenStack cloud. Either way, all operations are performed through the REST APIs.

Figure 3: Logical Architecture of OpenStack
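Every client, whether Horizon or the command-line tools, starts by asking Keystone for a token over the REST API. As a minimal sketch using only the Python standard library, the request below is built but not sent; it assumes the Keystone v3 endpoint `http://controller:5000/v3` and the `admin` credentials used later in this guide. The JSON body follows the Keystone v3 password-authentication format, and `build_token_request` is a hypothetical helper name.

```python
import json
import urllib.request


def build_token_request(auth_url, username, password, project,
                        user_domain="default", project_domain="default"):
    """Build (but do not send) a Keystone v3 password-authentication request."""
    body = {
        "auth": {
            "identity": {
                "methods": ["password"],
                "password": {
                    "user": {
                        "name": username,
                        "domain": {"name": user_domain},
                        "password": password,
                    }
                },
            },
            # Scope the token to a project so it can be used with other services.
            "scope": {
                "project": {
                    "name": project,
                    "domain": {"name": project_domain},
                }
            },
        }
    }
    return urllib.request.Request(
        url=auth_url.rstrip("/") + "/auth/tokens",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_token_request("http://controller:5000/v3", "admin", "MINE_PASS", "admin")
print(req.get_method(), req.full_url)
```

Passing `req` to `urllib.request.urlopen` would send it; Keystone returns the token in the `X-Subject-Token` response header, and later requests to Nova, Glance or Neutron carry that token in an `X-Auth-Token` header.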

1 - NOVA

Nova, the OpenStack compute component, provides on-demand access to compute resources in the form of virtual machines (VMs) and manages compute resources across large networks.

Nova, also known as Compute, is highly scalable and provides fault management and self-service access to virtual machines, containers and bare-metal servers. Nova runs on Linux hosts such as Ubuntu and supports a range of virtualization technologies, including KVM, Xen, VMware and Hyper-V.

Nova is a core component of the OpenStack platform. Nova interacts with virtual machines, and other components such as Trove, Heat and Sahara interact with Nova to boot database instances, orchestrate resources and boot data-processing instances, respectively.

2 - GLANCE

Glance, the OpenStack image service, discovers, registers and retrieves disk and server images. All virtual machine images are stored in its repository, and Glance provides a RESTful API for querying VM image metadata and retrieving the actual images. Glance can keep images in backends ranging from a simple file system to object storage such as Swift (another OpenStack component).

Figure 4: Glance Architecture

3 - SAHARA

Sahara provisions data processing frameworks such as Apache Hadoop, Apache Storm and Apache Spark on OpenStack. A framework is configured by specifying details such as its version, the cluster topology and the node hardware. Sahara has its own architecture, roadmap and rationale.

Figure 5: Sahara Architecture

The Sahara architecture consists of the following components:

- Sahara pages

- Python Sahara Client

- RESTful API

- Auth component

- DAL - Data Access Layer

- Secure Storage access layer

- Provisioning Engine

- Vendor Plugins

- EDP - Elastic Data Processing

4 - SWIFT

Swift is the OpenStack component for storing object/blob data. It is highly available and distributed, and it can store large amounts of data efficiently, safely and cheaply. Swift (Object Storage) provides redundant, scalable storage that uses server clustering to hold petabytes of data. It offers long-term storage for large amounts of data, a distributed architecture with no central point of control, redundancy, good performance and scalability. Swift ensures the redundancy and integrity of data across multiple hardware devices, and all operations are performed through its API.
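Swift avoids a central lookup service by hashing each object's path onto a fixed number of partitions (the ring), which are then mapped to storage devices. The sketch below shows only the first step, in simplified form: it assumes a partition power of 10 (1,024 partitions) and leaves out the secret hash prefix/suffix a real cluster mixes in, as well as the assignment of partitions and replicas to devices. `object_partition` is a hypothetical helper, not Swift's own API.

```python
import hashlib
import struct


def object_partition(account, container, obj, part_power):
    """Map an object path to one of 2**part_power partitions (simplified)."""
    path = f"/{account}/{container}/{obj}".encode("utf-8")
    digest = hashlib.md5(path).digest()
    # Read the first 4 bytes as a big-endian unsigned int and keep its top bits.
    (top32,) = struct.unpack_from(">I", digest)
    return top32 >> (32 - part_power)


part = object_partition("AUTH_demo", "photos", "cat.jpg", part_power=10)
print(part)
```

Because the partition depends only on the hash of the path, any proxy node can compute where an object lives without asking a central server.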


5 - NEUTRON

Neutron provides network connectivity between the different components/services of an integrated OpenStack deployment. Neutron supports rich network topologies and advanced network policies, such as multi-tier web application topologies, as well as advanced network capabilities such as L2 and L3 tunneling that avoid the limitations of VLANs.

6 - CINDER

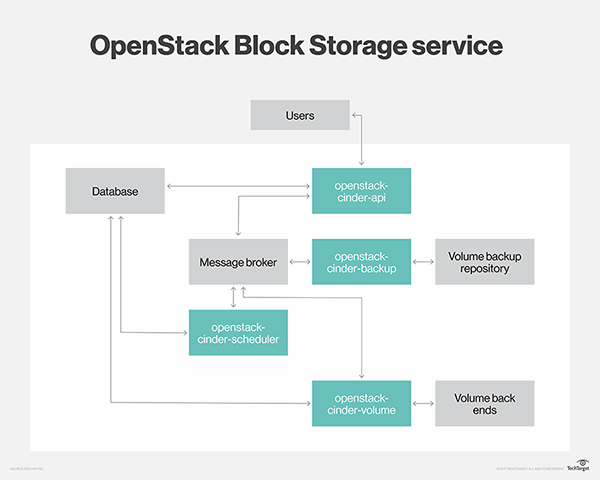

Cinder is persistent block storage software for cloud computing; OpenStack uses it to store data as block storage. Cinder provides an API for using its features without needing to know the type or location of the underlying storage. Cinder serves storage both to standalone OpenStack services and to guest virtual machines (instances). Cinder provides replication, consistency and snapshot management. Physical storage devices can be attached to the Cinder server node, or third-party storage vendors can be used through Cinder's plug-in architecture.

Figure 6: Block Storage API with other OpenStack services

General Steps for configuring OpenStack Components

- Create Component DB

- Give DB rights to component user

- Create Component User and Assign role

- Create Component Service(s)

- Create Component Endpoints

- Install software component

- Configure config file

- Initialize component DB

- Start the service

All OpenStack components/projects follow this same installation process.
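Because every component repeats the same steps, the database, user, service and endpoint parts can be generated from a few parameters. The following hypothetical Python helper only produces the SQL statements and `openstack` commands as text (nothing is executed); it assumes the `MINE_PASS` password, the `service` project, `RegionOne` and the `controller` host name used throughout this guide. Components with extra requirements, such as Nova's second database and versioned endpoint URL, would need adjustments.

```python
def component_setup_commands(name, service_type, description, port,
                             db_password="MINE_PASS"):
    """Generate the DB, user, service and endpoint steps for one component.

    Hypothetical helper: it only builds text and executes nothing.
    """
    sql = [
        f"CREATE DATABASE {name};",
        f"GRANT ALL PRIVILEGES ON {name}.* TO '{name}'@'localhost' "
        f"IDENTIFIED BY '{db_password}';",
        f"GRANT ALL PRIVILEGES ON {name}.* TO '{name}'@'%' "
        f"IDENTIFIED BY '{db_password}';",
    ]
    cli = [
        f"openstack user create --domain default --password-prompt {name}",
        f"openstack role add --project service --user {name} admin",
        f'openstack service create --name {name} '
        f'--description "{description}" {service_type}',
    ]
    # One endpoint per interface, all pointing at the controller.
    for interface in ("public", "internal", "admin"):
        cli.append(
            f"openstack endpoint create --region RegionOne "
            f"{service_type} {interface} http://controller:{port}"
        )
    return sql, cli


sql, cli = component_setup_commands("glance", "image", "OpenStack Image", 9292)
print("\n".join(sql + cli))
```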

Basic Setup for Deploying OpenStack

1- Keystone

Keystone is installed on the controller node and provides identity services, such as usernames and passwords, to all customers.

@Controller

Log in to MariaDB

sudo mysql -u root -p

CREATE DATABASE keystone;

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' \

IDENTIFIED BY 'MINE_PASS';

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' \

IDENTIFIED BY 'MINE_PASS';

exit

sudo apt install keystone

sudo vi /etc/keystone/keystone.conf

#Tell keystone how to access the DB

[database]

#Comment out any existing connection entry

connection = mysql+pymysql://keystone:MINE_PASS@controller/keystone

#Use Fernet tokens (the keys are created by keystone-manage fernet_setup below). Put it in, it's important

[token]

provider = fernet
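The `connection` value is an SQLAlchemy-style URL, and each part has to match the earlier MariaDB steps: the user and password from the `GRANT` statements, the database host, and the database name from `CREATE DATABASE`. A small standard-library sketch (the `parse_db_url` name is hypothetical) that splits it up for checking:

```python
from urllib.parse import urlsplit


def parse_db_url(url):
    """Split a dialect+driver://user:password@host/database URL into its parts."""
    parts = urlsplit(url)
    dialect, _, driver = parts.scheme.partition("+")
    return {
        "dialect": dialect,
        "driver": driver,
        "user": parts.username,
        "password": parts.password,
        "host": parts.hostname,
        "database": parts.path.lstrip("/"),
    }


info = parse_db_url("mysql+pymysql://keystone:MINE_PASS@controller/keystone")
print(info)
```

Passwords containing characters such as `@`, `/` or `:` would need to be URL-encoded inside the connection string.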

sudo su -s /bin/sh -c "keystone-manage db_sync" keystone

sudo keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

sudo keystone-manage credential_setup --keystone-user keystone --keystone-group keystone
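`keystone-manage fernet_setup` creates the key repository (by default under `/etc/keystone/fernet-keys/`) that Keystone uses to encrypt and sign Fernet tokens. Each key is 32 random bytes in URL-safe base64, half used for signing and half for encryption. The sketch below reproduces that format with the standard library for illustration only; the helper names are hypothetical, and real keys should be created and rotated with `keystone-manage`.

```python
import base64
import binascii
import os


def generate_fernet_key():
    """Return 32 random bytes encoded as URL-safe base64 (44 characters)."""
    return base64.urlsafe_b64encode(os.urandom(32))


def looks_like_fernet_key(key):
    """True if the key decodes to exactly 32 bytes (16 signing + 16 encryption)."""
    try:
        return len(base64.urlsafe_b64decode(key)) == 32
    except (binascii.Error, ValueError):
        return False


key = generate_fernet_key()
print(key.decode("ascii"), looks_like_fernet_key(key))
```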

sudo keystone-manage bootstrap --bootstrap-password MINE_PASS \

--bootstrap-admin-url http://controller:35357/v3/ \

--bootstrap-internal-url http://controller:35357/v3/ \

--bootstrap-public-url http://controller:5000/v3/ \

--bootstrap-region-id RegionOne

sudo vi /etc/apache2/apache2.conf

ServerName controller

sudo service apache2 restart

sudo rm -f /var/lib/keystone/keystone.db

sudo vi ~/keystonerc_admin

export OS_USERNAME=admin

export OS_PASSWORD=MINE_PASS

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=default

export OS_PROJECT_DOMAIN_NAME=default

export OS_AUTH_URL=http://controller:35357/v3

export OS_IDENTITY_API_VERSION=3

export PS1='[\u@\h \W(keystone_admin)]$ '

source ~/keystonerc_admin

openstack project create --domain default \

--description "Service Project" service
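Every `openstack` command in this guide reads its credentials from the variables exported by the admin credentials file created above, so a missing or misspelled variable shows up as confusing authentication errors. A hypothetical checker, assuming the file contains only simple `export NAME=value` lines:

```python
import re

# Variables the admin credentials file is expected to define.
REQUIRED = {
    "OS_USERNAME", "OS_PASSWORD", "OS_PROJECT_NAME", "OS_USER_DOMAIN_NAME",
    "OS_PROJECT_DOMAIN_NAME", "OS_AUTH_URL", "OS_IDENTITY_API_VERSION",
}

EXPORT_RE = re.compile(r"^\s*export\s+([A-Za-z_][A-Za-z0-9_]*)=(.*)$")


def parse_openrc(text):
    """Collect `export NAME=value` lines, dropping simple surrounding quotes."""
    env = {}
    for line in text.splitlines():
        match = EXPORT_RE.match(line)
        if match:
            name, value = match.groups()
            env[name] = value.strip().strip("'\"")
    return env


def missing_variables(env):
    """Required variable names that the parsed file does not define."""
    return sorted(REQUIRED - set(env))


SAMPLE = """\
export OS_USERNAME=admin
export OS_PASSWORD=MINE_PASS
export OS_PROJECT_NAME=admin
export OS_USER_DOMAIN_NAME=default
export OS_PROJECT_DOMAIN_NAME=default
export OS_AUTH_URL=http://controller:35357/v3
export OS_IDENTITY_API_VERSION=3
"""

env = parse_openrc(SAMPLE)
print(missing_variables(env))  # []
```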

openstack --os-auth-url http://controller:35357/v3 \

--os-project-domain-name default --os-user-domain-name default \

--os-project-name admin --os-username admin token issue

Password:

+------------+-----------------------------------------------------------------+

| Field | Value |

+------------+-----------------------------------------------------------------+

| expires | 2016-11-30 13:05:15+00:00 |

| id | gAAAAABYPsB7yua2kfIZhoDlm20y1i5IAHfXxIcqiKzhM9ac_MV4PU5OPiYf_ |

| | m1SsUPOMSs4Bnf5A4o8i9B36c-gpxaUhtmzWx8WUVLpAtQDBgZ607ReW7cEYJGy |

| | yTp54dskNkMji-uofna35ytrd2_VLIdMWk7Y1532HErA7phiq7hwKTKex-Y |

| project_id | b1146434829a4b359528e1ddada519c0 |

| user_id | 97b1b7d8cb0d473c83094c795282b5cb |

+------------+-----------------------------------------------------------------+
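The `expires` field shows how long the issued token stays valid; Keystone's default token lifetime is one hour. A hedged sketch for checking the remaining lifetime (requires Python 3.7+ for `datetime.fromisoformat`), where the "current" time is an assumed value chosen for the example:

```python
from datetime import datetime, timezone


def seconds_until_expiry(expires, now):
    """Seconds left before a token expires (negative once it has expired)."""
    expiry = datetime.fromisoformat(expires)  # e.g. "2016-11-30 13:05:15+00:00"
    return (expiry - now).total_seconds()


# Assumed "current" time, one hour before the expiry shown in the table above.
now = datetime(2016, 11, 30, 12, 5, 15, tzinfo=timezone.utc)
print(seconds_until_expiry("2016-11-30 13:05:15+00:00", now))  # 3600.0
```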

2- Glance

Image service that stores all VM images for customers; it resides on the controller node.

@Controller

sudo mysql -u root -p

CREATE DATABASE glance;

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' \

IDENTIFIED BY 'MINE_PASS';

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' \

IDENTIFIED BY 'MINE_PASS';

exit

source ~/keystonerc_admin

openstack user create --domain default --password-prompt glance

openstack role add --project service --user glance admin

openstack service create --name glance \

--description "OpenStack Image" image

openstack endpoint create --region RegionOne \

image public http://controller:9292

openstack endpoint create --region RegionOne \

image internal http://controller:9292

openstack endpoint create --region RegionOne \

image admin http://controller:9292

sudo apt install glance

sudo vi /etc/glance/glance-api.conf

#Configure the DB connection

[database]

connection = mysql+pymysql://glance:MINE_PASS@controller/glance

#Tell glance how to get authenticated via keystone. Every time a service needs to do something it needs to be authenticated via keystone.

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = glance

password = MINE_PASS

#(Comment out or remove any other options in the [keystone_authtoken] section.)

[paste_deploy]

flavor = keystone

#Glance can store images in different locations. We are using file for now

[glance_store]

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images/

sudo vi /etc/glance/glance-registry.conf

#Configure the DB connection

[database]

connection = mysql+pymysql://glance:MINE_PASS@controller/glance

#Tell glance-registry how to get authenticated via keystone.

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = glance

password = MINE_PASS

#(Comment out or remove any other options in the [keystone_authtoken] section.)

#Use keystone for authentication in the paste pipeline

[paste_deploy]

flavor = keystone

sudo su -s /bin/sh -c "glance-manage db_sync" glance

sudo service glance-registry restart

sudo service glance-api restart

wget http://download.cirros-cloud.net/0.3.4/cirros-0.3.4-x86_64-disk.img
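The `--disk-format` passed to `openstack image create` should match the file's real format. QCOW2 files begin with the four magic bytes `QFI\xfb`, so a quick header check before uploading can catch a mismatch. A minimal sketch (hypothetical helper; it only distinguishes QCOW2 from everything else and does not validate the rest of the image):

```python
import os
import tempfile

QCOW2_MAGIC = b"QFI\xfb"  # first 4 bytes of every QCOW2 image


def detect_disk_format(path):
    """Return "qcow2" if the file starts with the QCOW2 magic, else "unknown"."""
    with open(path, "rb") as image:
        magic = image.read(4)
    return "qcow2" if magic == QCOW2_MAGIC else "unknown"


# Demonstration with a fake header; point it at cirros-0.3.4-x86_64-disk.img
# to check the downloaded image instead.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(QCOW2_MAGIC + bytes(508))
print(detect_disk_format(tmp.name))  # qcow2
os.remove(tmp.name)
```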

source ~/keystonerc_admin

openstack image create "cirros" \

--file cirros-0.3.4-x86_64-disk.img \

--disk-format qcow2 --container-format bare \

--public

openstack image list

+--------------------------------------+--------+--------+

| ID | Name | Status |

+--------------------------------------+--------+--------+

| d5edb2b0-ad3c-453a-b66d-5bf292dc2ee8 | cirros | active |

+--------------------------------------+--------+--------+

3- Nova

Nova is responsible for the VM instances created from VM images, which can be launched at any time. An image is like an installation CD that can be used whenever you want to install a system. Nova runs on more than one machine, such as the controller and compute nodes, and its parts do different jobs there, such as management and communication with other OpenStack services.

@Controller

sudo mysql -u root -p

CREATE DATABASE nova_api;

CREATE DATABASE nova;

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' \

IDENTIFIED BY 'MINE_PASS';

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' \

IDENTIFIED BY 'MINE_PASS';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' \

IDENTIFIED BY 'MINE_PASS';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' \

IDENTIFIED BY 'MINE_PASS';

exit

source ~/keystonerc_admin

openstack user create --domain default \

--password-prompt nova

openstack role add --project service --user nova admin

openstack service create --name nova \

--description "OpenStack Compute" compute

openstack endpoint create --region RegionOne \

compute public http://controller:8774/v2.1/%\(tenant_id\)s

openstack endpoint create --region RegionOne \

compute internal http://controller:8774/v2.1/%\(tenant_id\)s

openstack endpoint create --region RegionOne \

compute admin http://controller:8774/v2.1/%\(tenant_id\)s
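The backslashes in `%\(tenant_id\)s` only stop the shell from interpreting the parentheses; Keystone stores the URL template as `%(tenant_id)s` and fills in the caller's project ID when it builds the service catalog. The substitution behaves roughly like Python %-formatting, as in this sketch (hypothetical helper; the project ID is the one from the token example earlier, used purely for illustration):

```python
def expand_endpoint(template, project_id):
    """Substitute the project ID into a catalog URL template."""
    # tenant_id is the older name for project_id; supply both.
    return template % {"tenant_id": project_id, "project_id": project_id}


template = "http://controller:8774/v2.1/%(tenant_id)s"
print(expand_endpoint(template, "b1146434829a4b359528e1ddada519c0"))
# http://controller:8774/v2.1/b1146434829a4b359528e1ddada519c0
```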

sudo apt install nova-api nova-conductor nova-consoleauth \

nova-novncproxy nova-scheduler

sudo vi /etc/nova/nova.conf

#Configure the DB-1 access

[api_database]

connection = mysql+pymysql://nova:MINE_PASS@controller/nova_api

#Configure the DB-2 access (nova has 2 DBs)

[database]

connection = mysql+pymysql://nova:MINE_PASS@controller/nova

[DEFAULT]

#Configure how to access RabbitMQ

transport_url = rabbit://openstack:MINE_PASS@controller

#Use the below. Some details will follow later

auth_strategy = keystone

my_ip = 10.30.100.215

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

#Tell Nova how to access keystone

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = MINE_PASS

#Remote access to instance consoles through VNC (just take it on faith; we will explore this in a much later episode)

[vnc]

vncserver_listen = $my_ip

vncserver_proxyclient_address = $my_ip

#Nova needs to talk to glance to get the images

[glance]

api_servers = http://controller:9292

#Directory where oslo.concurrency keeps its lock files (just use it)

[oslo_concurrency]

lock_path = /var/lib/nova/tmp
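Many start-up failures come from a missing or mistyped option in files like this one. The sketch below uses Python's `configparser` to read a trimmed copy of the controller's `nova.conf` and report missing Keystone options or a `transport_url` that does not use the `rabbit://` scheme. It is an approximation: OpenStack services parse their files with oslo.config, not `configparser`, and the list of required keys here is an assumption drawn from the settings above.

```python
import configparser
from urllib.parse import urlsplit

# Assumed minimum set of options, taken from the [keystone_authtoken] section above.
REQUIRED_AUTH_KEYS = ("auth_url", "auth_type", "project_name", "username", "password")


def check_service_conf(text):
    """Return a list of problems found in a nova.conf-style INI text."""
    # interpolation=None keeps values such as $my_ip or % characters literal.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    problems = []
    if not parser.has_section("keystone_authtoken"):
        problems.append("missing [keystone_authtoken]")
    else:
        for key in REQUIRED_AUTH_KEYS:
            if not parser.get("keystone_authtoken", key, fallback=""):
                problems.append(f"[keystone_authtoken] {key} is empty")
    transport = parser.get("DEFAULT", "transport_url", fallback="")
    if urlsplit(transport).scheme != "rabbit":
        problems.append("transport_url is not a rabbit:// URL")
    return problems


SAMPLE = """\
[DEFAULT]
transport_url = rabbit://openstack:MINE_PASS@controller
my_ip = 10.30.100.215

[keystone_authtoken]
auth_url = http://controller:35357
auth_type = password
project_name = service
username = nova
password = MINE_PASS

[vnc]
vncserver_listen = $my_ip
"""

print(check_service_conf(SAMPLE))  # []
```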

sudo su -s /bin/sh -c "nova-manage api_db sync" nova

sudo su -s /bin/sh -c "nova-manage db sync" nova

sudo service nova-api restart

sudo service nova-consoleauth restart

sudo service nova-scheduler restart

sudo service nova-conductor restart

sudo service nova-novncproxy restart

@compute1

sudo apt install nova-compute

sudo vi /etc/nova/nova.conf

[DEFAULT]

#Configure how to access RabbitMQ

transport_url = rabbit://openstack:MINE_PASS@controller

#Take it on faith for now

auth_strategy = keystone

my_ip = 10.30.100.213

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

#Tell the nova-compute how to access keystone

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = MINE_PASS

#Remote access to instance consoles through VNC (just take it on faith; we will explore this in a much later episode)

[vnc]

enabled = True

vncserver_listen = 0.0.0.0

vncserver_proxyclient_address = $my_ip

novncproxy_base_url = http://controller:6080/vnc_auto.html

#Nova needs to talk to glance to get the images

[glance]

api_servers = http://controller:9292

#Directory where oslo.concurrency keeps its lock files (just use it)

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

sudo vi /etc/nova/nova-compute.conf

[libvirt]

virt_type = qemu
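`virt_type = qemu` selects full software emulation, which works everywhere but is slow; `kvm` needs the CPU to expose hardware virtualization, which is often missing when the compute node is itself a virtual machine, as it presumably is in this lab. A sketch of the usual check, similar in spirit to `egrep -c '(vmx|svm)' /proc/cpuinfo` (the helper name is hypothetical):

```python
import os
import re


def choose_virt_type(cpuinfo_text):
    """Return "kvm" if the CPU flags include vmx (Intel VT-x) or svm (AMD-V), else "qemu"."""
    if re.search(r"\b(vmx|svm)\b", cpuinfo_text):
        return "kvm"
    return "qemu"


# On a Linux compute node, inspect the real CPU flags.
if os.path.exists("/proc/cpuinfo"):
    with open("/proc/cpuinfo") as cpuinfo:
        print("suggested virt_type:", choose_virt_type(cpuinfo.read()))
```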

sudo service nova-compute restart

source ~/keystonerc_admin

openstack compute service list

+----+------------------+-----------------------+----------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+------------------+-----------------------+----------+---------+-------+----------------------------+

| 3 | nova-consoleauth | controller | internal | enabled | up | 2016-11-30T12:54:39.000000 |

| 4 | nova-scheduler | controller | internal | enabled | up | 2016-11-30T12:54:36.000000 |

| 5 | nova-conductor | controller | internal | enabled | up | 2016-11-30T12:54:34.000000 |

| 6 | nova-compute | compute1 | nova | enabled | up | 2016-11-30T12:54:33.000000 |

+----+------------------+-----------------------+----------+---------+-------+----------------------------+
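Checking every row of such a table by eye gets tedious as nodes are added. A hypothetical helper that parses the ASCII table printed by `openstack ... list` commands and reports services that are not both `enabled` and `up`; the parser itself also works on other `list` tables in this guide, such as the network agent list.

```python
def parse_cli_table(text):
    """Turn an ASCII table printed by the openstack CLI into a list of dicts."""
    rows = [
        [cell.strip() for cell in line.strip().strip("|").split("|")]
        for line in text.splitlines()
        if line.strip().startswith("|")  # skips the +----+ border lines
    ]
    header, *body = rows
    return [dict(zip(header, row)) for row in body]


def unhealthy_services(text):
    """Binaries whose Status is not 'enabled' or whose State is not 'up'."""
    return [
        row["Binary"]
        for row in parse_cli_table(text)
        if row["Status"] != "enabled" or row["State"] != "up"
    ]


SAMPLE = """\
+----+------------------+------------+----------+---------+-------+----------------------------+
| ID | Binary           | Host       | Zone     | Status  | State | Updated At                 |
+----+------------------+------------+----------+---------+-------+----------------------------+
| 3  | nova-consoleauth | controller | internal | enabled | up    | 2016-11-30T12:54:39.000000 |
| 4  | nova-scheduler   | controller | internal | enabled | up    | 2016-11-30T12:54:36.000000 |
| 5  | nova-conductor   | controller | internal | enabled | up    | 2016-11-30T12:54:34.000000 |
| 6  | nova-compute     | compute1   | nova     | enabled | up    | 2016-11-30T12:54:33.000000 |
+----+------------------+------------+----------+---------+-------+----------------------------+
"""

print(unhealthy_services(SAMPLE))  # []
```

For scripts, asking the client for machine-readable output with `openstack compute service list -f json` avoids table parsing altogether.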

4- Neutron

Neutron is responsible for networking in OpenStack. There are two types of networks: external networks (usually configured once and used by OpenStack to reach the external world) and tenant networks (assigned to customers). OpenStack also requires virtual switching, and two components are mostly used for this purpose: Open vSwitch and Linux Bridge.

@Controller

sudo mysql -u root -p

CREATE DATABASE neutron;

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' \

IDENTIFIED BY 'MINE_PASS';

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' \

IDENTIFIED BY 'MINE_PASS';

exit

source ~/keystonerc_admin

openstack user create --domain default --password-prompt neutron

openstack role add --project service --user neutron admin

openstack service create --name neutron \

--description "OpenStack Networking" network

openstack endpoint create --region RegionOne \

network public http://controller:9696

openstack endpoint create --region RegionOne \

network internal http://controller:9696

openstack endpoint create --region RegionOne \

network admin http://controller:9696

sudo apt install neutron-server neutron-plugin-ml2

sudo vi /etc/neutron/neutron.conf

[DEFAULT]

#ML2 (Modular Layer 2) is the core plugin; the router service plugin provides L3 routing

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = True

notify_nova_on_port_status_changes = True

notify_nova_on_port_data_changes = True

[database]

#Configure the DB connection

connection = mysql+pymysql://neutron:MINE_PASS@controller/neutron

[keystone_authtoken]

#Tell neutron how to talk to keystone

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = MINE_PASS

[nova]

#Tell neutron how to talk to nova to inform nova about changes in the network

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = MINE_PASS

sudo vi /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

#In our environment we will use vlan networks so the below setting is sufficient. You could also use vxlan and gre, but that is for a later episode

type_drivers = flat,vlan

#Here we are telling neutron that all our customer networks will be based on vlans

tenant_network_types = vlan

#Our SDN type is openVSwitch

mechanism_drivers = openvswitch,l2population

extension_drivers = port_security

[ml2_type_flat]

#External network is a flat network

flat_networks = external

[ml2_type_vlan]

#This is the range we want to use for vlans assigned to customer networks.

network_vlan_ranges = external,vlan:1381:1399

[securitygroup]

#Use the iptables-based firewall

firewall_driver = iptables_hybrid
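In `network_vlan_ranges`, a physical network listed without a range (`external` here) can be used for provider networks but not for automatically allocated tenant networks, while `vlan:1381:1399` hands out VLAN IDs 1381 to 1399 to tenant networks. Since every VLAN tenant network consumes one ID, that range allows at most 19 such networks, a concrete instance of the VLAN limitations that tunneling avoids. A hedged parsing sketch (hypothetical helper, not Neutron's own code):

```python
def parse_vlan_ranges(value):
    """Parse an ML2 network_vlan_ranges value such as "external,vlan:1381:1399".

    Returns {physnet: (first, last)}, or {physnet: None} when no range is given.
    """
    ranges = {}
    for entry in value.split(","):
        parts = entry.strip().split(":")
        if len(parts) == 1:
            ranges[parts[0]] = None
        elif len(parts) == 3:
            first, last = int(parts[1]), int(parts[2])
            # 802.1Q VLAN IDs are 12 bits wide; 0 and 4095 are reserved.
            if not 1 <= first <= last <= 4094:
                raise ValueError(f"invalid VLAN range in {entry!r}")
            ranges[parts[0]] = (first, last)
        else:
            raise ValueError(f"cannot parse {entry!r}")
    return ranges


ranges = parse_vlan_ranges("external,vlan:1381:1399")
first, last = ranges["vlan"]
print(last - first + 1)  # 19 VLAN IDs available for tenant networks
```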

sudo su -

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf \

--config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

sudo service neutron-* restart

sudo vi /etc/nova/nova.conf

#Tell nova how to get in touch with neutron, to get network updates

[neutron]

url = http://controller:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = MINE_PASS

service_metadata_proxy = True

metadata_proxy_shared_secret = MINE_PASS

sudo service nova-* restart

@neutron

sudo apt install neutron-plugin-ml2 \

neutron-l3-agent neutron-dhcp-agent \

neutron-metadata-agent neutron-openvswitch-agent

sudo ovs-vsctl add-br br-ex

sudo ovs-vsctl add-port br-ex ens10

sudo ovs-vsctl add-br br-vlan

sudo ovs-vsctl add-port br-vlan ens9

sudo vi /etc/network/interfaces

# This file describes the network interfaces available on your system

# and how to activate them. For more information, see interfaces(5).

source /etc/network/interfaces.d/*

# The loopback network interface

# No Change

auto lo

iface lo inet loopback

#No Change on management network

auto ens3

iface ens3 inet static

address 10.30.100.216

netmask 255.255.255.0

# Add the br-vlan bridge

auto br-vlan

iface br-vlan inet manual

up ifconfig br-vlan up

# Configure ens9 to work with OVS

auto ens9

iface ens9 inet manual

up ip link set dev $IFACE up

down ip link set dev $IFACE down

# Add the br-ex bridge and move the IP for the external network to the bridge

auto br-ex

iface br-ex inet static

address 172.16.8.216

netmask 255.255.255.0

gateway 172.16.8.254

dns-nameservers 8.8.8.8

# Configure ens10 to work with OVS. Remove the IP from this interface

auto ens10

iface ens10 inet manual

up ip link set dev $IFACE up

down ip link set dev $IFACE down
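Moving the external IP from `ens10` to `br-ex` is the step most likely to cut the node off, so it is worth double-checking the static settings before `sudo reboot`. A hypothetical standard-library check that each address and netmask form a valid interface and that the gateway lies in the same subnet, using the values from the interfaces file above:

```python
import ipaddress


def check_static_iface(address, netmask, gateway=None):
    """Return address/prefix for a static stanza; fail if the gateway is off-subnet."""
    iface = ipaddress.ip_interface(f"{address}/{netmask}")
    if gateway is not None and ipaddress.ip_address(gateway) not in iface.network:
        raise ValueError(f"gateway {gateway} is outside {iface.network}")
    return iface.with_prefixlen


print(check_static_iface("10.30.100.216", "255.255.255.0"))                 # 10.30.100.216/24
print(check_static_iface("172.16.8.216", "255.255.255.0", "172.16.8.254"))  # 172.16.8.216/24
```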

sudo reboot

sudo vi /etc/neutron/neutron.conf

[DEFAULT]

auth_strategy = keystone

#Tell neutron how to access RabbitMQ

transport_url = rabbit://openstack:MINE_PASS@controller

#Tell neutron how to access keystone

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = MINE_PASS

sudo vi /etc/neutron/plugins/ml2/openvswitch_agent.ini

#Configure the section for OpenVSwitch

[ovs]

#Note that we are mapping alias(es) to the bridges. Later we will use these aliases (vlan,external) to define networks inside OS.

bridge_mappings = vlan:br-vlan,external:br-ex

[agent]

l2_population = True

[securitygroup]

#iptables-based firewall

firewall_driver = iptables_hybrid
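Each `bridge_mappings` entry ties a physical network name used in `ml2_conf.ini` (`flat_networks` and `network_vlan_ranges`) to an Open vSwitch bridge created earlier with `ovs-vsctl add-br`. A node can only plug ports into physical networks it has a mapping for, so a quick cross-check helps. In this hypothetical sketch the compute node's value, `vlan:br-vlan`, is the one configured later in this guide; its missing `external` mapping is presumably intended, since the L3 agent and the external bridge live only on the network node.

```python
def parse_bridge_mappings(value):
    """Parse physnet:bridge pairs, e.g. "vlan:br-vlan,external:br-ex"."""
    mappings = {}
    for pair in value.split(","):
        physnet, sep, bridge = pair.strip().partition(":")
        if not sep or not physnet or not bridge:
            raise ValueError(f"cannot parse {pair!r}")
        mappings[physnet] = bridge
    return mappings


def unmapped_physnets(ml2_physnets, bridge_mappings):
    """Physical networks declared in ml2_conf.ini that this node cannot reach."""
    return sorted(set(ml2_physnets) - set(bridge_mappings))


network_node = parse_bridge_mappings("vlan:br-vlan,external:br-ex")
compute_node = parse_bridge_mappings("vlan:br-vlan")
physnets = ["external", "vlan"]  # from flat_networks / network_vlan_ranges
print(unmapped_physnets(physnets, network_node))  # []
print(unmapped_physnets(physnets, compute_node))  # ['external']
```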

sudo vi /etc/neutron/l3_agent.ini

[DEFAULT]

#Tell the agent to use the OVS driver

interface_driver = neutron.agent.linux.interface.OVSInterfaceDriver

#This is required to be set like this by the official documentation (if you don’t set it to empty as shown below, your router ports in OS will sometimes not become Active)

external_network_bridge =

sudo vi /etc/neutron/dhcp_agent.ini

[DEFAULT]

#Tell the agent to use the OVS driver

interface_driver = neutron.agent.linux.interface.OVSInterfaceDriver

enable_isolated_metadata = True

sudo vi /etc/neutron/metadata_agent.ini

[DEFAULT]

nova_metadata_ip = controller

metadata_proxy_shared_secret = MINE_PASS

sudo service neutron-* restart

@compute1

sudo apt install neutron-plugin-ml2 \

neutron-openvswitch-agent

sudo ovs-vsctl add-br br-vlan

sudo ovs-vsctl add-port br-vlan ens9

sudo vi /etc/network/interfaces

# This file describes the network interfaces available on your system

# and how to activate them. For more information, see interfaces(5).

source /etc/network/interfaces.d/*

# The loopback network interface

#No Change

auto lo

iface lo inet loopback

#No Change to management network

auto ens3

iface ens3 inet static

address 10.30.100.213

netmask 255.255.255.0

# Add the br-vlan bridge interface

auto br-vlan

iface br-vlan inet manual

up ifconfig br-vlan up

#Configure ens9 to work with OVS

auto ens9

iface ens9 inet manual

up ip link set dev $IFACE up

down ip link set dev $IFACE down

sudo reboot

sudo vi /etc/neutron/neutron.conf

[DEFAULT]

auth_strategy = keystone

#Tell neutron component how to access RabbitMQ

transport_url = rabbit://openstack:MINE_PASS@controller

#Configure access to keystone

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = MINE_PASS

sudo vi /etc/nova/nova.conf

#Tell nova how to access neutron for network topology updates

[neutron]

url = http://controller:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = MINE_PASS

sudo vi /etc/neutron/plugins/ml2/openvswitch_agent.ini

#Here we are mapping the alias vlan to the bridge br-vlan

[ovs]

bridge_mappings = vlan:br-vlan

[agent]

l2_population = True

[securitygroup]

firewall_driver = iptables_hybrid

sudo reboot

sudo service neutron-* restart

source ~/keystonerc_admin

openstack network agent list

+--------------------------------------+--------------------+-----------------------+-------------------+-------+-------+---------------------------+

| ID | Agent Type | Host | Availability Zone | Alive | State | Binary |

+--------------------------------------+--------------------+-----------------------+-------------------+-------+-------+---------------------------+

| 84d81304-1922-47ef-8b8e-c49f83cff911 | Metadata agent | neutron | None | True | UP | neutron-metadata-agent |

| 93741a55-54af-457e-b182-92e15d77b7ae | L3 agent | neutron | None | True | UP | neutron-l3-agent |

| a3c9c1e5-46c3-4649-81c6-dc4bb9f35158 | Open vSwitch agent | neutron | None | True | UP | neutron-openvswitch-agent |

| ba9ce5bb-6141-4fcc-9379-c9c20173c382 | DHCP agent | neutron | nova | True | UP | neutron-dhcp-agent |

| e458ba8a-8272-43bb-bb83-ca0aae48c22a | Open vSwitch agent | compute1 | None | True | UP | neutron-openvswitch-agent |

+--------------------------------------+--------------------+-----------------------+-------------------+-------+-------+---------------------------+

5- Horizon

The graphical user interface for OpenStack; it resides on the controller node.

@controller

sudo apt install openstack-dashboard

sudo vi /etc/openstack-dashboard/local_settings.py

OPENSTACK_HOST = "controller"

ALLOWED_HOSTS = ['*', ]

SESSION_ENGINE = 'django.contrib.sessions.backends.cache'

CACHES = {

'default': {

'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',

'LOCATION': 'controller:11211',

}

}

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

OPENSTACK_API_VERSIONS = {

"identity": 3,

"image": 2,

"volume": 2,

}

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "default"

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

TIME_ZONE = "TIME_ZONE"  # placeholder: replace with your time zone identifier, e.g. "UTC"

sudo service apache2 reload
